Freddie deBoer's False Binary

AI is a spectrum.

Freddie deBoer issued a challenge to Scott Alexander. Scott first claimed that AI would become AGI by 2027, then 2029… now it’s 2036.

Freddie asks a fair question: why should we trust maximalists if they keep moving the goalposts and pushing back their timelines? If they keep getting things wrong, maybe they need to humble themselves and give credit to people like Freddie who never boarded the hype train.1

Where I take issue with Freddie is not his rebuttal of maximalism, but his econometric standards. To determine whether or not AI is humanity-altering, he uses:

unemployment rate, GDP, equity values, inflation, profit margins, market caps, wages, and income inequality.

He then invites folks to criticize his metrics.

So that’s what I did, in a pithy, offhand way, calling them a bit ridiculous. But that wasn’t very useful of me.

Apologies.

As a writer, I put a lot of work into my ideas. Sometimes I am wrong. However, there is an inherent inequality between my level of effort and the effort of my critics. Sometimes I receive criticisms so vague that there’s nothing I could possibly learn from them. These range from racial slurs to passive-aggressive snark.

This is, unfortunately, how most internet discourse works: you work really hard to cover all your bases, and then someone comes along and does a drive-by, hit-and-run attack… Normally, this doesn’t go anywhere.2

After seeing Freddie’s frustration at my comment, I realized that I was too pithy and not helpful. I am part of the problem. He deserves a more detailed explanation of my criticism.

What’s normal?

Freddie’s benchmark of 18% unemployment is a poor (if well-intentioned) test of the impact of AI, for two reasons:

There is nothing normal about 18% unemployment;

AI can be extremely impactful without resulting in high unemployment.

How is that possible? According to Nicholas Decker:

Please do not take studies which show small labor market effects of AI, and infer that this means that AI is doing nothing to increase productivity. This is incorrect. Labor market impacts are not able to capture the full benefit of AI, even in the long run.

My own economic projection for unemployment is as follows:

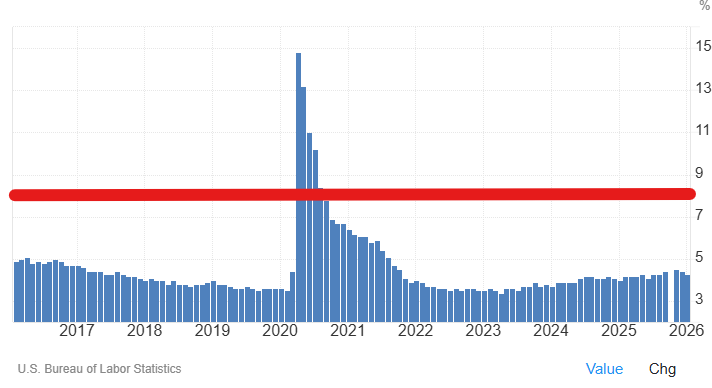

A 50% increase in BWC-STEMPS (Bachelor-degree White Collar STEM Private Sector) unemployment will result in a roughly 4-percentage-point increase in overall unemployment.

Not AGI; not the end of the world; not the collapse of civilization. Still, 8% unemployment would be the highest unemployment in memory outside of COVID.3

The Econometric Fallacy

To give Freddie credit, he made a good-faith effort to measure the impact of AI based on popular conceptions of econometrics. I do not believe he was playing 5D chess, picking poor measures to artificially boost his chances of winning a bet. Still, his approach commits what I will call the econometric fallacy.

While I agree with Freddie that we should not overestimate the impact of AI, I do not think that his econometric standards work the way he thinks they work. To be fair, he seems to be humble about this — I’m writing in the spirit of education rather than BTFO. Furthermore, I don’t think AI maximalists understand this point either, because they regularly project insane statistics about unemployment and GDP growth.

The econometric fallacy is when you judge the impact of something specific according to extremely general econometrics over a very small amount of time. For example:

We’ve had a 2% growth rate no matter what; therefore, innovation doesn’t matter.4

Or another one you will see more frequently: Canada and Britain had high immigration over this five-year period but didn’t see amazing economic growth; therefore, we know that mass immigration is inherently harmful.

Or in this case: if unemployment, GDP growth, and wages don’t radically shift over the next three years, we know that AI is not a world-altering technology.

Populists love the econometric fallacy, which they then use to undermine economics as a discipline. By misusing econometrics, they can say, see? Economists don’t know anything, and then promote tariffs, closed borders, and price controls.

Populists use catch-phrases like, we’re a nation, not an economy. Eventually, they progress to dismissing economic growth itself as superficial or meaningless and prefer to measure quality of life in hamburgers and baseball games.

These arguments are superficial. They fail to take into account how complex economies actually function.

I’m hoping that the arguments I make here will help make clear why the econometric fallacy is wrong. We do not need to fall into a binary of nothing-burgers and techno-panic.

Techno-Panic

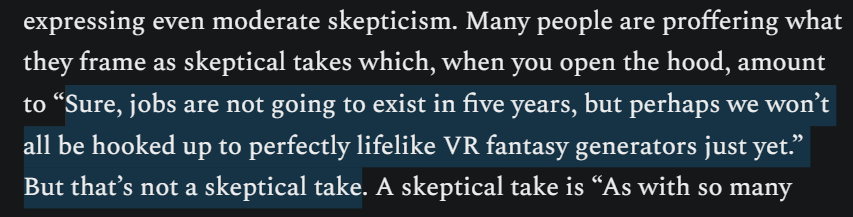

From Freddie’s perspective, our collective Overton Window of AI skepticism is extremely skewed. The maximalists are presented as reasonable, and the so-called skeptics are really just moderate maximalists in disguise.

I have sympathy for Freddie, because when I log into Twitter, I see a million posts promoting Epstein conspiracies. Many of these people are antisemitic Epstein maximalists. They believe Epstein was a Jewish supremacist cannibal toddler-rapist who blackmailed the global elite on behalf of Mossad.

Juxtaposed with this group, you have the so-called Epstein moderates. These are people who frame themselves as skeptical but end up performing a subversive function. Rather than applying real skepticism, they merely moderate the maximalist claims.

Epstein wasn’t a toddler-rapist, but we know he was raping 14-year-olds.5

Epstein wasn’t blackmailing people for Mossad, but we know he was blackmailing people for other purposes.

Epstein wasn’t a cannibal, but we know he was pure evil.

I could go on and on in this manner — people offering up no evidence at all for their claims, but framing themselves as skeptics in opposition to the most extreme maximalists. If you push back on these claims about evil rape and blackmail, they will tell you that you are the extremist — the burden of proof is shifted onto the skeptics to prove their skepticism.

Freddie encounters the same problem in AI discourse. Maximalists claim that AGI is coming in 2027 — 90% of jobs will disappear. On the other hand, so-called moderates will say, well, 90% is too much, but we’re still going to lose 50% of jobs. This false dichotomy squeezes out real skeptics, like Freddie. And if 50% is the cut-off point, then put me on the side of Freddie and the skeptics.

When I criticize Freddie for using the 18% unemployment figure as a test of AI impact, he assumes I am another hypocritical AI maximalist who loves Scott Alexander and promotes the singularity.6 But I’m not. I’m trying to point out that there is a vast difference between maximalism, reasonable skepticism, and dismissive skepticism.7

I invite AI skeptics to stop thinking in terms of an AI binary, where it’s all-or-nothing.

What is maximalism?

Freddie gives us five claims from the maximalist camp:

the demise of human reasoning

a post-work economy

exponential economic growth

distributed intelligence drone swarms

[and] Skynet launching the nukes to rid the world of human presence.

I don’t believe in any of these things. I am a big believer in human reasoning; biological beings will perform useful labor for centuries to come; I reject exponential economic growth; I am a drone swarm skeptic; and I do not foresee AI launching nukes to eliminate Homo sapiens.

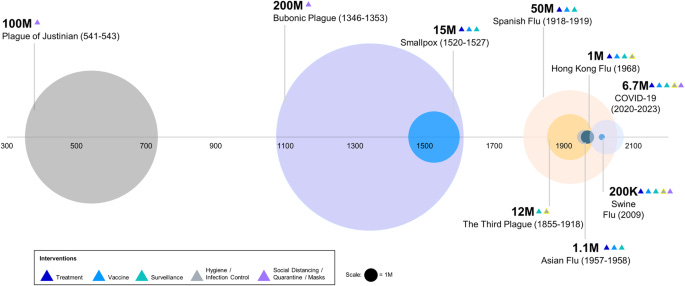

However, I still believe that AI will have a world-altering impact, of the same or greater magnitude as nuclear technology, railroads, the printing press, the radio, the telephone, the highway system, or the internet.

If you don’t consider any of these technologies to be world-altering, then your definition is far too stringent. At that point, we need to have a discussion of whether any technology has ever fundamentally altered the world. That’s not economics — that’s philosophy.

AI can be transformative without being mystical. It can be impactful without being supernatural.

Specifically, world-altering technology does not necessitate massive unemployment. We know this from every technological leap in history: nuclear power, railroads, telephones, television, radio, and the printing press all had relatively weak short-term effects on GDP, unemployment, and inflation within the first 5-10 years of their implementation. Yet all of those technologies were world-altering.

False Binaries

I agree at the highest level with Freddie. AI will not end the human species; it will not replace all jobs; it will not take over all governments; it will neither create utopia nor will it lead to extinction.

But when I apply skepticism to Freddie’s econometric tests, he addresses me as one of “you guys.”

Freddie has fallen into a binary. Either AI is a nothing-burger, and it will have no effect whatsoever, or the maximalists are correct, and 90% of jobs will disappear next year.

Both are wrong.

Freddie wants to guide the conversation in a more productive direction by forcing the maximalists to make precise econometric predictions.8 My (friendly) critique is that AI’s share of GDP is a much better test than any of his suggestions.

Does the internet matter?

Has the internet forever changed the fundamentals of human existence? That’s a subjective question, with numerous interpretations. But at the very least, we should admit that the answer is ambiguous. How do we objectively measure the fundamentals of human existence?

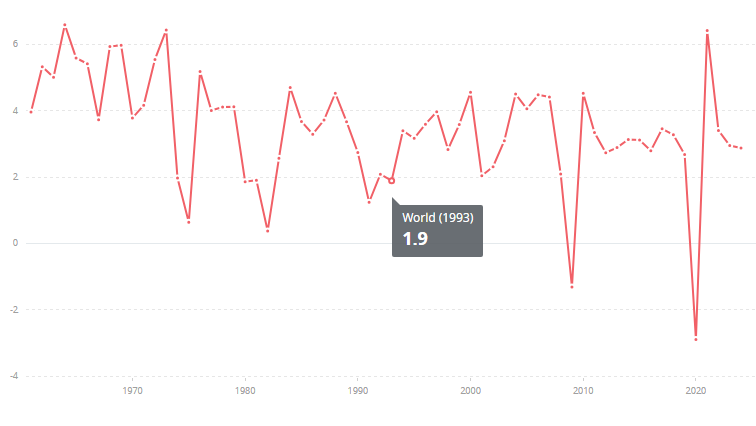

One measurement is GDP growth. Has the internet meaningfully changed the growth rate of the global economy? Unfortunately, no.

Let’s rephrase the question: has the internet changed the fundamentals of the global economy? Yes. But has it changed GDP growth, unemployment, inflation, profit margins, or income inequality? Not really.

How is this possible? If the internet causes economic changes (we all use it every day), why doesn’t it show up in the data? Where the hell are all the gains going?

There are three ways to look at this:

1. DEMONIC PORTALS:

Perhaps the internet makes life much more efficient, but it also rots our brains, destroys our social lives, and causes mass crippling porn addiction, anxiety, and depression. If you’re a Christian, maybe the internet is a demonic portal that Satan uses to distract us from God. All together, maybe the negative effects of internet usage perfectly offset the positive effects, leading to a net economic effect of exactly zero.

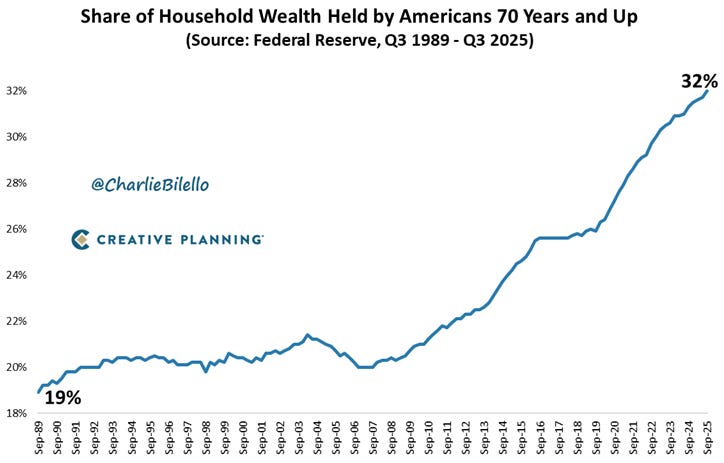

2. GERONTOCRACY:

As I argue in my article against Rob Henderson, conservatives are the party of gerontocracy, and Democrats have no interest in opposing them. Between these two, we are rapidly redistributing resources from productive workers to useless parasites. As a result, the gains from the internet are being gobbled up by hungry hippos just as fast as they can be produced, creating an equilibrium growth rate of 2-3%.

If we could roll back the gerontocracy to the halcyon days of 2008, we’d see much higher growth rates. But the spooky stability of this equilibrium can be mostly explained by the cliodynamics of democracy.

If the gerontocracy gets too bad, it will self-correct and self-limit; but if growth goes too high, the gerontocracy gets greedy and gobbles it up. Fundamental growth has been occurring under the hood, but it is being eroded by increasing gerontocratic inefficiency, so it doesn’t show up in the data.

3. POOR ACCOUNTING:

As Nicholas Decker argues in his article, Labor Market Impacts Are Not the Same As Welfare Impacts, economic growth is not a perfect measurement of welfare growth.

This argument requires a bit more explanation than the previous two.

The term welfare here does not mean food stamps; it means good stuff. As Decker argues in a related article:

The trouble is that “economic growth” is not really one thing. It consists both of expanding our quantity of units consumed for a given amount of resources, but also in expanding what we are capable of consuming at all. Take the television – it has simultaneously become cheaper and greatly improved in quality. One can easily imagine a world in which the goods stay the same price, but greatly improve in quality.

The year is 1956, and you own a black-and-white television which costs $100. Fast forward to 2026: you now own a color television which costs $100. From the point of view of econometric measurements, nothing has changed: you own the same number of TVs at the same price. However, something clearly has changed: the quality of the product is vastly improved.
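To make the quality point concrete, here is a minimal sketch of a quality-adjusted price: the nominal price divided by a quality index. The quality scores are invented for illustration (I am assuming the 2026 set is five times “better” than the 1956 one), and formal hedonic methods are far more elaborate than this, so treat it as a toy model of the idea rather than a measurement.

```python
# Toy model of quality adjustment for the TV example.
# The quality_index values are hypothetical, chosen only for illustration.

def quality_adjusted_price(nominal_price: float, quality_index: float) -> float:
    """Dollars paid per unit of quality (nominal price / quality index)."""
    return nominal_price / quality_index

# Same nominal price, one TV each year: a simple unit count sees no change.
price_1956 = quality_adjusted_price(100.0, quality_index=1.0)  # black-and-white baseline
price_2026 = quality_adjusted_price(100.0, quality_index=5.0)  # assumed 5x better set

print("Nominal: $100 -> $100 (one TV either way)")
print(f"Quality-adjusted: ${price_1956:.2f} -> ${price_2026:.2f}")
print(f"Fall in quality-adjusted price: {1 - price_2026 / price_1956:.0%}")
```

Under these made-up numbers, the buyer gets five times as much television per dollar even though the receipt looks identical.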

Similarly, technologies like the internet and AI can vastly improve the quality of goods and services, but without any necessary impact on wages, unemployment, inflation, or GDP growth.

To really drive the point home, Decker lists many of the ways in which life has gotten better that are not necessarily represented in econometric data:

There are lots of ways in which work has gotten more pleasant. We lift less, and engage in less physically arduous labor; we have air conditioning; we have phones and music; we have the ability to skip commuting and call into the office; we might have more accommodating sick leave policies; we can drive to work. AI is another improvement in pleasantness – it is so much easier to have something else do the rote thinking of writing emails and blog posts.

The Gooning Surplus

In the demonic portal theory, I joked that perhaps the economic gains from internet usage were being cancelled out by widespread porn addiction. If men spend hours a day gooning instead of eating healthy, working out, or improving their skills, we might expect economic productivity to fall as a result. A population which is sad, depressed, and socially isolated will probably have worse productivity.

However, let’s flip that argument on its head: let’s judge goods not by subjective or moral preference, but by revealed preference. According to the Bible, masturbation is dirty, nasty, and will send you straight to hell. But according to revealed preferences, masturbation is a highly enjoyable hobby.

Rather than viewing porn addiction as evidence of an economic deficit, we could view it as a leisure activity. Even if a good becomes free, that does not mean that its economic value is zero.

As Nicholas Decker puts it:

We would like to know how much AI is increasing our productivity, but if the productivity increase is coming through people having a more pleasant time on the job and working less, we will not be able to pick it up.9

I know this point might be lost on some people, so I’ll try to explain it by the converse:

Fixing the Apocalypse

Let’s say that in some future post-apocalyptic dystopia, the air was polluted, and in order to breathe pure air, we all needed to refill our oxygen tanks on a daily basis. This cost each of us $100 per year, for a total of $800 billion per year across a world population of roughly 8 billion. Then imagine that a government developed a way to purify the air at a cost of $8 trillion. This program would pay for itself after 10 years.

However, once the program was finished, we would all benefit from clean air forever. Hence, the benefit of clean air would continue to be $800 billion per year, but no one would bother measuring this in econometric data — we would simply fail to account for this at all.
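Assuming roughly 8 billion people each paying $100 a year (the reading under which the $800 billion total works out), the arithmetic of the thought experiment can be sketched as follows. All figures are hypothetical, taken from the scenario rather than from any real data.

```python
# Thought-experiment arithmetic for the clean-air example.
# Assumption: ~8 billion people, each paying $100 per year for oxygen refills.
POPULATION = 8_000_000_000
OXYGEN_COST_PER_PERSON_PER_YEAR = 100      # dollars
PURIFIER_COST = 8_000_000_000_000          # one-off $8 trillion program

annual_cost = POPULATION * OXYGEN_COST_PER_PERSON_PER_YEAR
payback_years = PURIFIER_COST / annual_cost

print(f"Annual oxygen bill: ${annual_cost / 1e9:,.0f} billion")
print(f"Payback period: {payback_years:.0f} years")

# After payback, the avoided cost keeps accruing every year, yet no
# transaction records it: the benefit is real but invisible in the data.
for year in (10, 20, 50):
    avoided = annual_cost * year
    print(f"Cumulative avoided cost after {year} years: ${avoided / 1e12:,.0f} trillion")
```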

In many ways, this is the world we live in. Prior to air conditioning, we were living in an extremely unpleasant world, which had huge daily costs, and led to heat stroke deaths. Now we have cheap and plentiful air conditioning, but we don’t take that into account when we measure economic growth. We just take it for granted.

By the same token, the revealed preference of porn users shows that porn is a highly valuable commodity, even if it is free. It might also be highly immoral and send you straight to hell, but from an economic point of view, immoral things like cocaine or prostitution still have a price; they are traded on the black market. If we’re concerned with net economic change, for good or for evil, we have to recognize that free or cheap does not mean inconsequential.

In 2012, companies lost $16.9 billion in revenue from employees using work computers to watch porn. One way to view this statistic is as an economic loss in productivity. Another way is to view it as an increase in leisure time among employees. However you view it, for good or for evil, it is most definitely a change brought on by the internet. But this change would never show up if you were just looking at the big KPIs like inflation, GDP, or unemployment.

This is the problem with Freddie’s wager. He’s trying to measure the impact of AI — great! I endorse his skepticism of Scott Alexander, and all other AI maximalists. But absence of economic evidence is not evidence of economic absence. Technology can still be transformative even while all the economic vital signs remain within a normal range.

Freddie’s approach ignores what we know about transformative technology. Rather than asking what AI will do to unemployment, GDP, or inflation, we should be asking:

What percentage of workers will use AI?

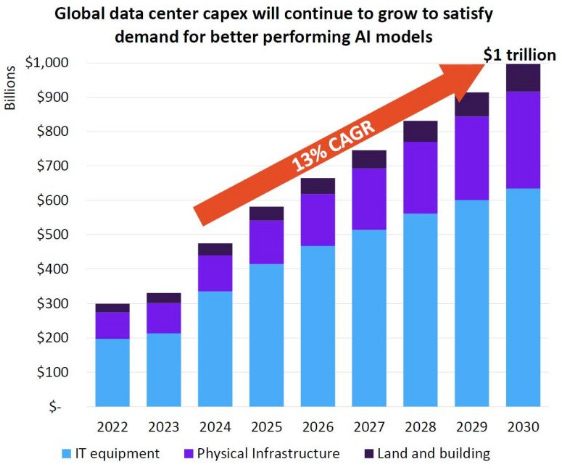

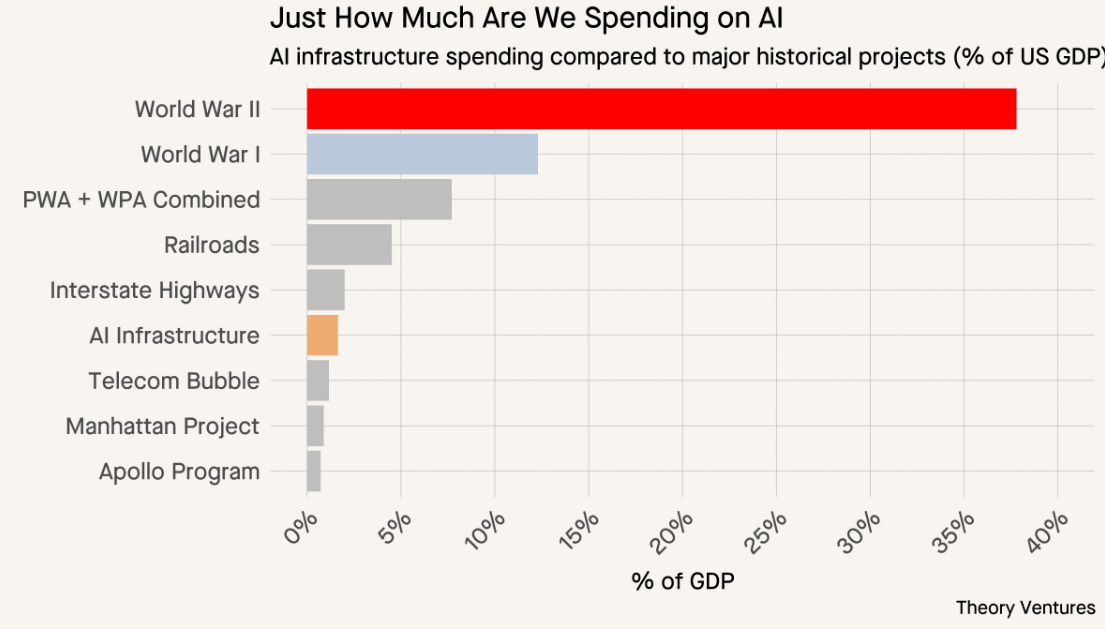

What percentage of GDP will be dedicated to AI data centers?

What percentage of the electrical grid will be dedicated to AI data centers?

Roughly, here’s where things stand today, globally, at minimum:

15% of all workers use AI;

<1% of global GDP is dedicated to building and maintaining AI data centers;

1.5% of all electricity in the world is dedicated to data centers.

My challenge to Freddie is this: what percentages would you declare to be transformative? Is there any number that you would consider world-altering? That’s how we should make a bet — based on direct impact, not macro KPIs.10

The two most direct measures of AI’s impact are the percentages of GDP and electricity dedicated to data centers. I predict that both will double by 2029. Would Freddie take that bet?
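For a sense of what “double by 2029” demands, the implied constant annual growth rate over n years is 2^(1/n) - 1. The starting year is my assumption (the sketch shows both 2025 and 2026), and the baseline shares are the rough figures listed above, with “<1%” of GDP treated as 1% for simplicity.

```python
# Constant yearly growth needed to double a quantity by 2029.
# Start years and baseline shares are illustrative assumptions.

def implied_annual_growth(multiple: float, years: int) -> float:
    """Compound annual growth rate that multiplies a quantity by `multiple` over `years`."""
    return multiple ** (1 / years) - 1

baselines = {"share of global GDP": 0.01, "share of global electricity": 0.015}

for start in (2025, 2026):
    years = 2029 - start
    rate = implied_annual_growth(2.0, years)
    print(f"Start {start}: ~{rate:.1%} growth per year for {years} years")
    for name, share in baselines.items():
        print(f"  {name}: {share:.1%} -> {share * (1 + rate) ** years:.1%}")
```

The shorter the window, the steeper the required growth, which is why the start year matters when settling a bet like this.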

Fiber Optics

Freddie expresses some vague philosophical feelings about human mediocrity.

According to Freddie, nothing ever happens. I’ll concede that I am not prepared in this essay to challenge that Deep Right ontology of the stagnant human condition.11

However, putting aside the spiritual mumbo jumbo about emotions and feelings, in material terms, things happen.

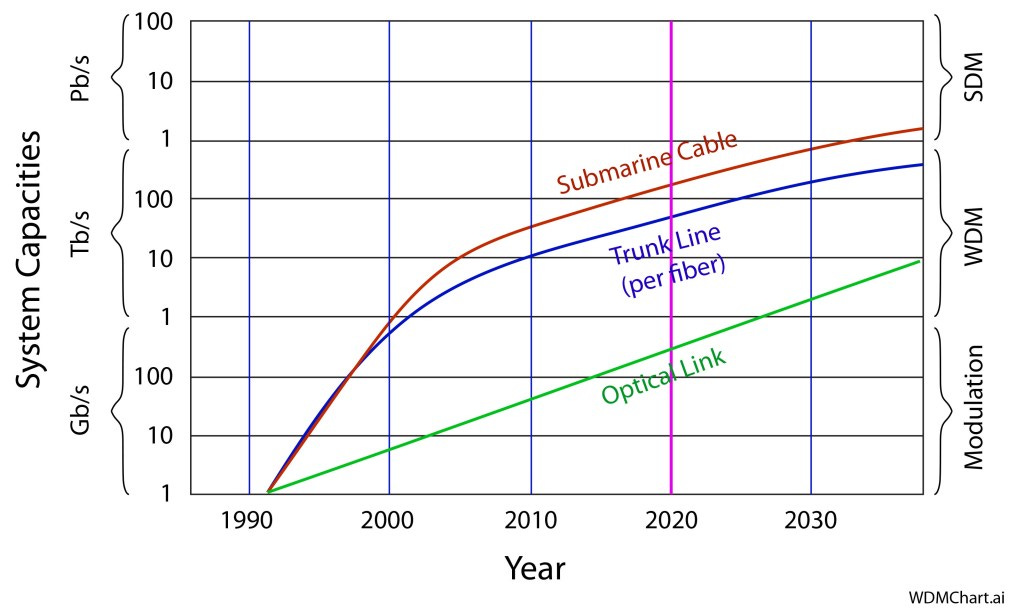

Let’s examine fiber optic cables, which were first deployed commercially in 1977. The total value of all fiber optic cables in the world is currently around $2 trillion. The majority of these cables were laid between 1993 and 2010.12

AI is an order of magnitude more significant. By 2029, I bet that we will be spending more on yearly AI CAPEX and maintenance costs than we have spent on all existing fiber optic cables. That’s 23 years of investment blown out of the water in a single year. Kind of a big deal!

This is the fundamental question we should be asking. Not about GDP, unemployment, or inflation, but:

What percentage of the global economy will be dedicated to data centers?

We’re currently around half a trillion dollars, globally. By the end of 2029, I predict $5 trillion globally (inflation adjusted) will be spent on the construction and maintenance (including electricity) of AI data centers. Will Freddie take that bet?

Or, instead of cash, let’s talk about percentage of GDP.

Would Freddie admit that the World Wars, the New Deal, railroads, and the interstate highway system were transformative? Is he willing to bet against data centers making up 5% of the American economy by 2029?

My point here isn’t to force Freddie into a losing position. I don’t want him to bet against me! Please, keep your money! Optimally, I’d like him to reconsider his position and agree with me that my predictions are reasonable.

I am not trying to make him into an AI maximalist, or an AI doomer. My intention is to point the way out of this false maximalist-minimalist binary. Realistic projections for AI can still result in transformative material impacts.

I agree with Freddie on the need to apply skepticism to science fiction quackery and New Age secular spirituality. Drone swarms, eternal life, uploading consciousness, and zero jobs are all fantasies. But touching grass doesn’t require improper econometric measures. Unemployment, inflation, and GDP are not good tests of innovative significance. Percentage of GDP is better.

Against the Maximalists

Now that I’ve thoroughly made my case against AI minimalism, I want to briefly address the maximalist case before we close up shop.

I freely speculate about the past. But there’s a huge difference between historical revisionism and futurology.

When we revise our view of the past, the implications for the present day are second-order at best. Archaeology and mythology have little bearing on taxes, regulations, or legal codes, but AGI is (supposedly) an emergency which demands our full attention.

If the AGI maximalists are wrong, the costs are quite large:

They are inflating an equity bubble. This is true, by the way, even if my predictions are correct: AI can grow even if the stock market crashes, just as the internet grew during the stock market decline of 2000-2002.

They risk lending credence to right-wing populists like James Fishback, Tucker Carlson, and Steve Bannon, all of whom demand regulations against AI. Even if AGI is fake, regulations are real, and they carry a terrible opportunity cost.

AI maximalists claim that AGI is an extinction-level event. This is a secular Pascal’s Wager: hell is so terrible that it is better for you to suspend your judgment and embrace Christianity. But the problem with Pascal’s Wager is that there are multiple religions with similar claims. I could argue that regulations on AI and biotech are even more catastrophic — hence, the AGI maximalists are dooming humanity with outlandish predictions that only encourage regulatory backlash.

AGI is too loosely defined, too fantastical, and too abstract. I am wary of anyone claiming that Epstein was trafficking children out to billionaires, because extraordinary claims require extraordinary evidence. Similarly, anyone talking about AGI needs to be extremely rigorous in their definitions.

Are LLMs Generally Intelligent?

Artificial general intelligence refers to an intelligence as smart as (or smarter than) the average person, which can perform high-level tasks as fast (or faster), at a fraction of the cost. But if that’s how we define AGI, then we could declare victory today: there are already some tasks which AI can complete faster, more consistently, and more cheaply than any human agent.

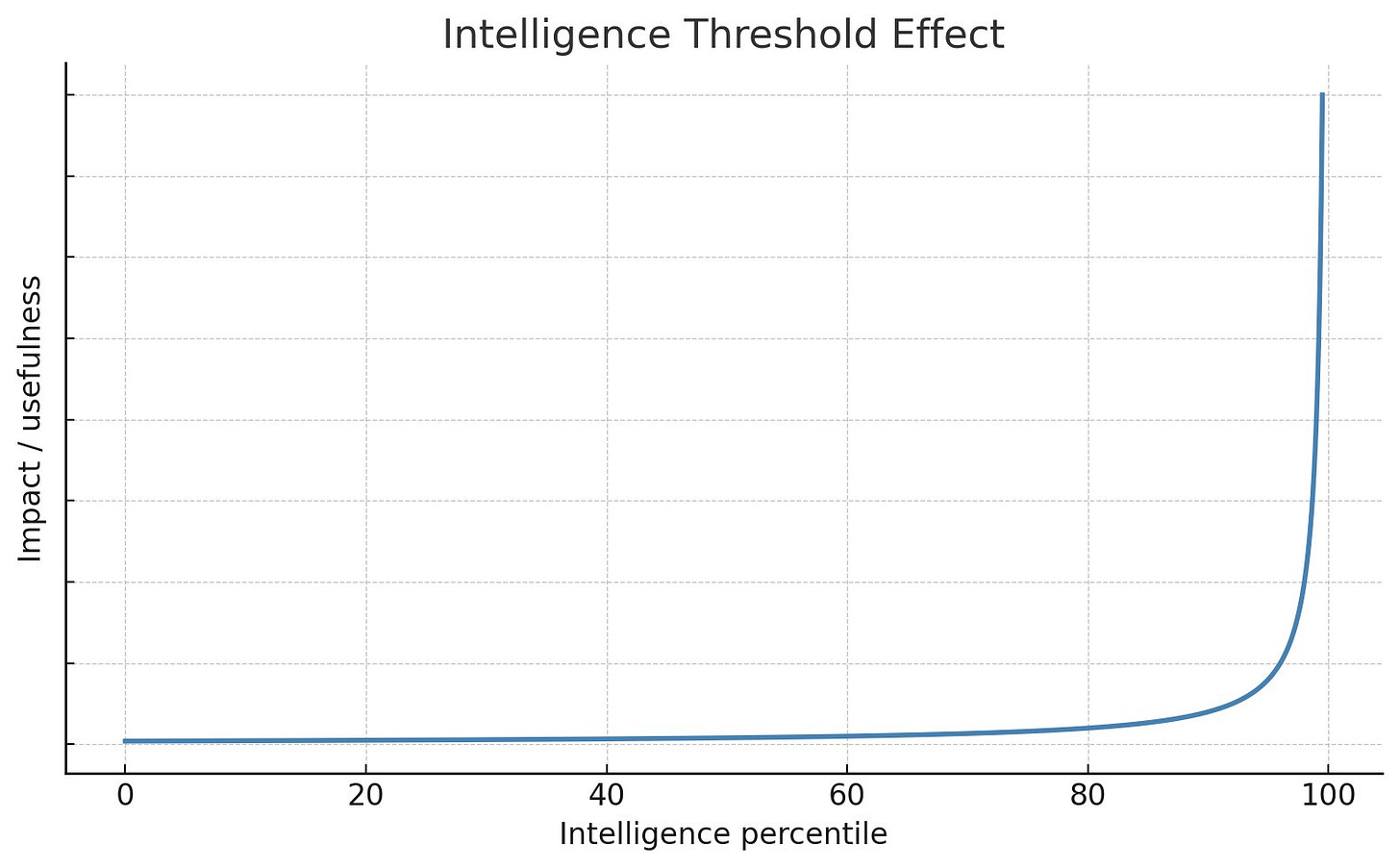

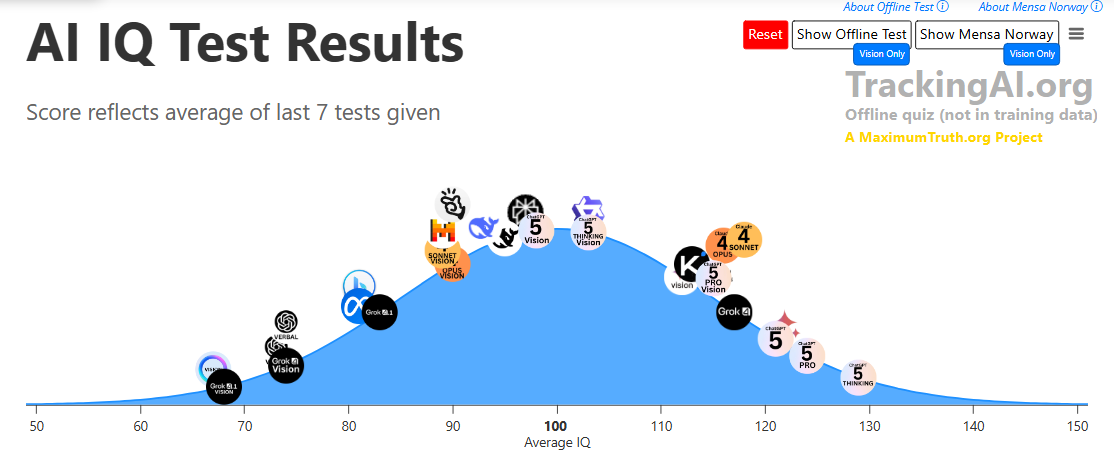

The weasel word here is general. What does it mean to be generally intelligent? Ostensibly, it means that AI will be able to perform every single cognitive task at a level equal to or better than a human agent. That is a fundamentally different question, and we can’t simply measure progress toward general intelligence by feeding AI IQ tests and seeing how it performs.

For example, there is a website which claims that ChatGPT has an IQ of 129. If this were true, why hasn’t every single cognitive work-from-home job requiring less than 129 IQ been already replaced by AI? Since this has obviously not occurred, the claim that ChatGPT has an IQ of 129 is deceptive.

AI might be able to perform at a level similar to a 129 IQ person on certain tasks, like an IQ test. But it is not emulating economic productivity. The intelligence quotient is not identical to the productivity quotient. The rising IQ of AI is not 1:1 with a rising PQ.

What is an IQ test?

IQ tests are designed to test human intelligence. They are not worthless, but they are not perfect either. Feeding IQ tests to AI and using its answers to judge its general intelligence does not translate into direct economic productivity.

First of all, IQ only explains 25% of the variance in productivity and lifelong income. 75% is something else entirely — personality traits, sociability, initiative, aggression, competitiveness, and creativity. Maybe even some of the spiritual stuff that Freddie gestures toward.

Maximalists claim that AI will surely be able to do all these human-like things — but they haven’t proven it. Even if AI is as smart as the maximalists claim, this doesn’t tell us anything about the other 75% of economic productivity. AI might be 4x as smart as the average human, but no more economically productive.

In humans, IQ correlates with all sorts of other good things: happiness, mental health, and physical strength. But this correlation isn’t necessarily causal.

To give an example:

If you buy a new car, it’s likely that the engine is healthy, but also, that the radio works too. On the other hand, if the engine is broken, there’s a higher likelihood that the radio is broken too.

This is because the underlying causes of decay (time) affect all structures of the car simultaneously. But this is a correlation, not a causation. Having a good engine does not cause a good radio, nor does a bad engine cause a bad radio.

Similarly, as AI advances its IQ, it won’t necessarily develop all the human traits which make us economically productive. Agency, instinct, or common sense might be entirely different structures, as different as a radio is from an engine. Thus AI might never reach the level of autonomy that the maximalists expect — or at least, it will take much longer than they project.

There’s also a serious question here regarding costs. Even if we are able to build a supercomputer with human-like intelligence, what will it cost to run and operate? If the AI performs no better than the human worker but costs $1 more, it will be functionally useless for that job.13
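The cost argument boils down to comparing cost per task at equal quality. A minimal sketch, with made-up wages, compute costs, and throughput; the `Worker` class and `cheaper` helper are hypothetical names for illustration only.

```python
# Hypothetical cost comparison: which worker is cheaper per task,
# assuming both produce output of identical quality.
from dataclasses import dataclass


@dataclass
class Worker:
    name: str
    cost_per_hour: float   # wages for a human; compute, energy, overhead for an AI
    tasks_per_hour: float  # throughput at the same quality

    def cost_per_task(self) -> float:
        return self.cost_per_hour / self.tasks_per_hour


def cheaper(a: Worker, b: Worker) -> str:
    """Name of the cheaper worker per task, or 'tie'."""
    ca, cb = a.cost_per_task(), b.cost_per_task()
    if ca == cb:
        return "tie"
    return a.name if ca < cb else b.name


# Illustrative numbers only.
human = Worker("human", cost_per_hour=30.0, tasks_per_hour=10)    # $3.00 per task
pricey_ai = Worker("AI", cost_per_hour=31.0, tasks_per_hour=10)   # $3.10 per task
cheap_ai = Worker("AI", cost_per_hour=31.0, tasks_per_hour=100)   # $0.31 per task

print(cheaper(human, pricey_ai))  # human
print(cheaper(human, cheap_ai))   # AI
```

The same AI flips from useless to dominant purely on throughput per dollar, without getting any “smarter.”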

AI already outperforms humans in many different tasks — but AGI implies that AI will outperform on every single task. The evidence points to the contrary. AI is a specialized tool, an assistant, not a generalized autonomous human-like intelligence. It will rapidly and transformatively reduce the need for human workers in certain sectors, but it cannot eliminate them entirely.

Conclusion

AI is, as we speak, wiping out white-collar jobs; I observed this happen in real time (hence I now write Substack articles full time instead of working my old Excel spreadsheet job). But even as AI takes some jobs, it creates others. AI could theoretically take 90% of present-day jobs, but if it created just as many jobs as it took, unemployment would not change at all.
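That last claim is simple stock-and-flow accounting. A minimal sketch, assuming a fixed labor force and invented job counts (these are not projections):

```python
# Toy labor-market accounting with a fixed, hypothetical labor force.
# Unemployment depends on net job change, not on how many jobs AI destroys.

def unemployment_rate(labor_force: int, jobs: int) -> float:
    return (labor_force - jobs) / labor_force


LABOR_FORCE = 100_000_000   # hypothetical
JOBS_BEFORE = 96_000_000    # 4% unemployment to start

destroyed = JOBS_BEFORE * 9 // 10   # AI takes 90% of present-day jobs

for replacement_ratio in (1.0, 0.9, 0.5):   # new jobs created per job destroyed
    created = int(destroyed * replacement_ratio)
    jobs_after = JOBS_BEFORE - destroyed + created
    rate = unemployment_rate(LABOR_FORCE, jobs_after)
    print(f"replacement ratio {replacement_ratio:.0%}: unemployment {rate:.1%}")
```

Headline unemployment tracks only the balance of flows; it says nothing about how much churn happened underneath.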

This is why I am critical of Freddie’s econometric standards. Technology changes the world — but it’s not so easy to measure. If we apply Freddie’s standards to any of the biggest inventions of all time, each one of them would fail to pass his thresholds over the timeframes he has prescribed.

This isn’t to say that GDP is useless as a measure; I’m the biggest GDP shill of them all. It just doesn’t capture everything, and what it misses can include really big things, like world-shattering innovations.

Vaccines, fertilizer, gunpowder — none of these resulted in mass unemployment, or massive 5-10 year gains in GDP, or any immediate changes at all. To the extent that we can measure gains from civilizational-level innovations, it is over the course of decades.

If that’s Freddie’s argument, then we broadly agree. Where I disagree is with the claim that nothing cool is going to happen.

While I am skeptical of the maximalists, the claim that AI is a scam is empirically false. AI already works; it steals jobs (and makes new ones), and in the long term it will be more impactful than COVID or the China shock.

Sometimes people write articles absolutely scorching me to death, and while I certainly do get annoyed when I am deliberately misrepresented and strawmanned, at the end of the day, on an emotional level, I prefer someone taking the time to crash out at me with a long list of bad arguments than leaving vague and unhelpful comments.

Even if someone misrepresents my views, that is somewhat useful: now I know where I need to be more clear and emphatic, so that my detractors look more silly and detached from reality.

But unfortunately, most of my haters don’t write long articles exposing me. It’s just a bunch of snarky, unhelpful one-liners.

COVID was also not normal; it was a once-in-a-century pandemic. Someone in 1935 predicting a second Great Depression would not call that normal. This is a semantic quibble on my part, but that’s not how normalcy works.

The term statutory rape confuses this issue. Given that we define statutory rape differently in different states, and the federal government is evidently cool with the age of consent being 16, I regard 16 as the de facto federal age of consent. Therefore, I do not regard Epstein’s conviction for consensual sex with a 17 year old to be real rape. Immoral, sure, but not rape. The ever expanding definition of rape — rape-flation — is not good.

If Freddie happened to read one of my 700 articles, it probably wasn’t about AI. It’s understandable why he would assume I was just another maximalist.

I attack Epstein skeptics because it makes me feel intellectually and morally superior. I feel proud to be in the 1% of free-thinking humans who think that Bill Gates is profoundly moral compared to the rapacious elites of yesteryear.

Maybe Freddie feels that way when he reads AI maximalists — a contrarian defying the crowd. But it also sounds like he is getting a bit exhausted and frustrated, to the point where he is ready to drop this topic entirely.

Even if Freddie doesn’t have the willpower to read yet another article on AI, it is still worth it for me to respond, because I think there are many people who hold Freddie’s views and are stuck in a false binary.

If AI creates 18% unemployment, that would be highly unusual and politically stressful. During COVID, Trump printed more money than any president before him, and then Biden did the same thing all over again, creating massive inflation.

I expect, at minimum, that AI will have similar effects. However, I’d prefer to measure AI by share of GDP, rather than any of the econometric data that Freddie suggests.

If you have fully understood the implications of this sentence, nuclear explosions should be going off in your brain right now.

In my article on TND, I claim just the opposite: most people in the future will not work with AI. There is no reason why a caretaker or construction worker needs to be using AI, and that’s where I predict the greatest job growth.

I am also skeptical that the soldiers of the future will be hooked up with expensive AI night-vision goggles — low tech, poorly equipped mercenaries are a more likely future. AI does not help you run faster, shoot straighter, or dodge bullets.

In passing, I will comment that profound indifference to material change could inspire a sympathy toward Marxism, which is not very good at producing wealth.

If you want to see an epic animation of what trillions of dollars of cables looks like, I don’t have that, but I do have this humble animation.

I make the complementary argument in my TND article, that AI doesn’t need to be as smart as us, just magnitudes cheaper. Here, I’m saying that if performance is identical, cheaper human wages will make AI useless. If this point isn’t clear, it’s because there is a mouse running around in the kitchen and the ethics of killing it are consuming my brain cells. Sorry. Ask me in the comments to clarify anything that didn’t make sense.

