ai is juicy.

third wave industrialism.

This article was requested by Terrance, a paid subscriber.

The usefulness of AI is determined by three things:

Precedent and repetition: is the desired result a trivial permutation of existing data? Or is the desired result “truly novel”?

Availability: is the source data publicly available?

Risk: is a negative result catastrophic, or inconvenient?

AI depends on a massive semi-open data source: the internet. Users generate data for free, and post their data semi-publicly. Data which is supposedly “private” is open to Apple, Facebook, and Google. They scrape direct messages and search queries for useful data to feed to AI.

It takes approximately 40 oranges to make a gallon of orange juice. Similarly, AI requires a vast amount of data input in order to generate useful outputs.

Most data on the internet is image, text, or video. Outside of these three domains, AI performs worse: it is not as good at driving cars, planes, or trains, because there is less data available, and because a negative result is catastrophic.

There are many AI optimists who believe that AI (with enough time, firepower, data, and tinkering) can begin producing novel solutions to novel problems. There are four approaches to this claim:

Hard no: AI might seem to produce novel solutions to novel problems, but these only “seem” to be novel. True novelty is not achievable by AI. All it can do is juice existing oranges — it cannot cultivate new species of oranges. AI can only compile pre-existing data and regurgitate it in juicy form.

Soft no: It is possible that with enough data, time, firepower, and tinkering, AI might successfully come up with novel solutions to novel problems. However, the amount of money and calories that would be required to run such an AI will always exceed the amount of money and calories that could be paid to a human to solve the same problem.

Soft yes: There are specific cases where AI could come up with novel solutions to novel problems with less money and calories than a human. However, humans will always be needed to prompt-engineer the AI. Without human assistance or cooperation, AI will lack the independent will to solve general problems. For example, an AI could help develop a better version of a machine gun, but it could not win a war.

Hard yes: AI will eventually solve all problems more cheaply than humans, without human assistance or intervention. Human cognition offers no advantages over AI.

For military reasons, “hard yes” is highly unlikely. “Hard yes” underestimates, misunderstands, ignores, and neglects the advantages of carbon-water systems. The difference between “hard no” and “soft yes” lies on an ambiguous or subjective spectrum, depending on how the question is phrased and how the result is judged.

In this essay, I will specifically address the impact of AI in five areas:

Military;

Academia;

Politics (wokeness);

Economics and manufacturing;

Geopolitics.

All of these topics are fairly intertwined, but I will try to deal with them separately.

silicon vs carbon-water:

If AI is capable of solving novel problems with novel solutions, this will likely be incredibly expensive compared to human cognition. People underestimate how cheap it is to produce a smart person. DNA and sperm are basically free, so the only costs are the egg and the womb. Surrogacy is $100k, and the cost to raise and educate a person is between $200k and $400k. Supercomputers cost millions, if not billions, of dollars. The energy cost to run a supercomputer runs in the millions per year. It is much easier to find an existing smart person and pay them millions of dollars to solve complex problems.

Supercomputers aren’t bad or a waste of time. They are extremely powerful in the hands of smart people at solving repetitive problems, like running simulations and scanning large amounts of data. But they aren’t going to outcompete or become more efficient than human biology at creative thinking. If it were truly possible to create a supercomputer for under $200k and to run it on $100k worth of electricity per year, such technological advances would quickly allow us to biologically augment and refine genetic technology. In the arms race of DNA vs silicon, DNA will always win.

Evolution is… an evolutionary algorithm. Evolution is artificial intelligence. Artificial intelligence ran for billions of years and created humans. It is possible to marginally improve genetic biology with the help of AI, but the basic structure of the neuron is already highly efficient and tested. It would be much easier and cheaper to genetically modify humans to grow brains in jars than it would be to create silicon-based supercomputers capable of greater efficiency than humans.

Take the top 1,000 smartest people in the world, and clone them a billion times. That would be cheaper and easier than trying to reinvent the wheel with a supercomputer that is meant to mimic the structure of the brain and somehow improve on it.

Again, supercomputers will be useful at solving specific repetitive problems, but they will always fail against humans when facing generalized, open-ended, or creative problems. If I am wrong, and AI is cheaper and more efficient than human brains, it would still make sense to invest in carbon-water brains rather than silicon ones, because carbon-water brains have military advantages.

military applications.

Consider a pregnant woman. She can walk around, move, and do limited work. It is probably possible to genetically shift the time of pregnancy and speed up the process by massively increasing metabolic processes. Women could eat 9,000 calories a day (9 pounds of beef) and have a much quicker pregnancy. Children could be born “premature” in a fetus form, and finish their development in an artificial mucus sack. All of this is fairly mobile.

Marsupials give birth to what is essentially still a fetus, then carry it around in a pouch. Humans could be genetically modified to be born at 3 months old, and then be stored in pouches and carried around.

The advantage of this is that instead of having a massive factory which requires massive amounts of energy to produce supercomputers, and is extremely vulnerable to EMP attacks and hacking, a human being can be produced anywhere, anytime. The human maturation process could easily be sped up by reducing the physical size and strength of the human body. By eliminating the growth of arms and legs, human brains could reach full maturity after five years of growth, with high enough metabolic gain.

Whales, which have enormous brains, can reach maturity after four years. The human brain is fairly complex, but with enough metabolic gain, this is possible. AI-bulls claim that we shouldn’t underestimate the potential of AI, but they simultaneously underestimate and dismiss the possibility of human metabolic engineering.

If adult human brains can be produced in four years on a diet of 9,000 calories a day, that’s 13,140 one-pound steaks over four years, or roughly $131,400. This is assuming $10 per pound of steak. If we can create some kind of Soylent-style meal replacement shake that can provide the same energy and nutrition for $0.10 per pound, then it would only cost $1,314 to create a human brain in four years. Billions of brains could be farmed very cheaply and solve complex problems with much less energy input than billions of supercomputers. Silicon technology is more energetically expensive than carbon-water brains.
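The arithmetic behind these figures can be sketched in a few lines. This is only a rough illustration: it takes the 9,000 calories a day, the four-year growth period, and the two prices per pound from the text, and it assumes (as the "9 pounds of beef" figure implies) about 1,000 calories per pound of feed. The function names are made up for the example.

```python
# Figures taken from the text: 9,000 kcal per day for four years,
# with 9,000 kcal treated as 9 pounds of beef (so ~1,000 kcal per pound).
KCAL_PER_DAY = 9_000
KCAL_PER_POUND = 1_000  # assumption implied by "9 pounds of beef"
DAYS_PER_YEAR = 365
GROWTH_YEARS = 4


def pounds_of_feed(years: int = GROWTH_YEARS) -> float:
    """Total pounds of feed consumed over the growth period."""
    return KCAL_PER_DAY / KCAL_PER_POUND * DAYS_PER_YEAR * years


def feed_cost(price_per_pound: float, years: int = GROWTH_YEARS) -> float:
    """Total feed cost in dollars at a given price per pound."""
    return pounds_of_feed(years) * price_per_pound


if __name__ == "__main__":
    for label, price in [("steak", 10.00), ("meal-replacement shake", 0.10)]:
        print(f"{label}: {pounds_of_feed():,.0f} lb, ${feed_cost(price):,.0f}")
```

The result scales linearly with every input, so halving the growth period or the price per pound halves the total.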

Neon Genesis Evangelion is an accurate depiction of the future. “Angels” will be developed with skulls which open and then fuse shut. The skull will open up and have an attachable brain stem. The brain will be placed inside the skull, and then “pilot” the body. These skulls will be reinforced with Kevlar and carbon nanotubes to provide the maximum protection against bullets. These bodies will be optimized for all sorts of things, including speed, strength, endurance, and regenerative ability.

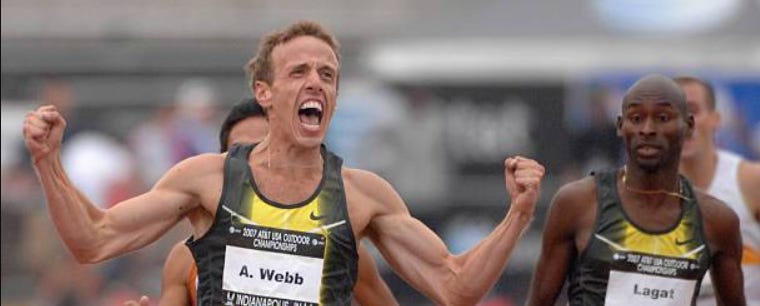

1,755 athletes have run a 4-minute mile. Bipedalism probably isn’t the most efficient means of gaining top speeds, but it is good for long-distance walking. With genetic engineering, many more barriers can be broken. There is no reason we can’t modify a cheetah to be capable of holding a human brain in a Kevlar skull and running 100 miles per hour and then ripping a target apart with claws and teeth, or firing some kind of explosive device at a target, or running suicide-missions. Birds can also be modified to hold human brains and travel 300 mph.

Humans can inhabit bodies which travel thousands of miles, non-stop, on very few calories. The advantage of these bodies is that they can be mass produced in a decentralized manner and they are not vulnerable to EMPs. They can also be more easily refueled. Robots and drones either run on oil or batteries, which are relatively cumbersome. Oil is produced in huge refineries and oil fields which are subject to attack, sabotage, and hacking. An oil field can be disabled with a single attack. But producing a steak and transporting it is decentralized. The military has already produced MRE rations which are light-weight and can last five years.

If we convert between food calories and electrical energy, there are about 2 calories in a AA battery. To run a car for a mile, you would need somewhere between a hundred and several hundred batteries, depending on the car.
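As a rough check on this conversion, here is a minimal sketch. The energy figures are assumptions, not numbers from the text: about 3 watt-hours for a typical alkaline AA cell and about 300 watt-hours per mile for an electric car. The unit conversions (3,600 joules per watt-hour, 4,184 joules per food calorie) are standard.

```python
JOULES_PER_WATT_HOUR = 3_600
JOULES_PER_KCAL = 4_184  # one food calorie (kcal)

# Assumed figures, for illustration only.
AA_WATT_HOURS = 3.0        # typical alkaline AA cell
CAR_WH_PER_MILE = 300.0    # rough electric-car consumption


def wh_to_kcal(watt_hours: float) -> float:
    """Convert electrical energy in watt-hours to food calories (kcal)."""
    return watt_hours * JOULES_PER_WATT_HOUR / JOULES_PER_KCAL


def batteries_per_mile(car_wh_per_mile: float, cell_wh: float) -> float:
    """Number of cells whose stored energy equals one mile of driving."""
    return car_wh_per_mile / cell_wh


if __name__ == "__main__":
    print(f"kcal per AA cell: {wh_to_kcal(AA_WATT_HOURS):.2f}")
    print(f"AA cells per mile: {batteries_per_mile(CAR_WH_PER_MILE, AA_WATT_HOURS):.0f}")
```

Under these assumptions a single cell holds a little over 2 food calories. The per-mile count scales directly with whatever consumption figure is plugged in, so a less efficient vehicle needs proportionally more cells.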

Car batteries are more efficient than AA batteries, but they are quite heavy. Regular car batteries weigh 30 lbs, and electric car batteries weigh up to 2,000 lbs. In comparison, 9,000 calories of steak weighs 9 lbs. Carbon-water beats silicon in terms of being light-weight, simple to refuel, mobile, and resistant to hacking and EMPs.

Carbon-water bodies are easier to heal and repair than silicon bodies. There is no reason to reinvent the wheel with nano-technology when cell-based systems already exist to tinker with. Lizards can regrow tails, and there is no reason why carbon-water bodies cannot be engineered to regrow arms or legs. These repair processes require no external aid besides steaks or soylent.

It is true that carbon-water organisms require large amounts of water to function, but outside of desert environments, water is easy to find and purify.

My criticism of AI supercomputers and silicon-mechas is that they fail to perform autonomously and under decentralized, light-weight conditions. Each mecha requires huge factories, oil fields, refineries, nuclear power plants, rare earth minerals, uranium, thousands of pounds of batteries, barrels of oil, refueling, repair centers, recycling centers, and so on. All of this is vulnerable to sabotage and attack. Give some cheetahs, superhumans, wolves, or great snipes some C4 or suitcase-nukes and they can take out the most important infrastructure. Heavily guarded infrastructure is vulnerable to EMP and hacking.

Autonomy is important for warfare, and this is something that AI-bulls do not consider. They believe that the future will be governed by an ultra-stable global government. Instead, the future will resemble ancient Scandinavia, with thousands of tribes launching yearly wars on their neighbors. You don’t want to be tied down by oil refineries, nuclear power plants, factories, command centers, or thousand-pound batteries.

In the near term, I don’t think aircraft carriers are useless against drone attacks or hypersonic missiles, because global military campaigns require transportation of tanks and planes, and aircraft carriers are good at transporting tanks and planes. However, the basic principle here is that producing a swarm of very cheap drones is superior to creating one giant behemoth mega-weapon. This applies to the conflict between AI and biotech. AI is dependent on large data centers, supercomputers, massive amounts of energy, and centralized logistics and supply lines. Biotech is autonomous, cheap, reliable, self-repairing, light-weight, mobile, and independent.

The internet could be shut down tomorrow by snipping a few undersea cables. These cables stretch for thousands of miles and there is no way to protect them. Satellite internet is more resilient, but space warfare has never been tried. Space drones can cheaply knock out all the satellites in orbit. Space warfare will make the internet unusable without super-heavily defended mega-satellites, or extremely cheap micro-satellites which can evade detection. As in the aircraft carrier analogy, large satellite systems are vulnerable to nuclear weapons.

As a result, supercomputers will have to rely more on native servers rather than decentralized internet connections. Supercomputers will exist in extremely large compounds which will be vulnerable to infiltration and sabotage.

Nukes will always be cheaper than defense systems. It doesn’t make sense to invest trillions of dollars in large, easy-to-hit targets. What makes sense is to invest trillions in an army of genetically modified monkeys which can seek and destroy targets with explosives. Make creepers real, but with the speed of cheetahs.

Carbon-water lifeforms combine their weapons technology with their production technology. All you need is two animals in order to create more animals with se

There are cases where silicon technology beats carbon-water, but silicon cannot beat carbon-water in all cases. Nanotech is a hoax insofar as it commits the same mistake, pretending that small silicon robots will always outperform self-replicating carbon-water life. Nanotech attempts to reinvent the wheel.

Genetically modified bacteria, viruses, and insects will all be more effective in military application than “nanobots.” Sufficiently advanced nanobots are indistinguishable from carbon-water bacteria-viruses. There is no reason to produce nano-bots in easy-to-target and easy-to-sabotage factories when DNA already decentralizes the reproduction process to each individual cell.

academia.

AI is blowing humans away at completing standardized tests, especially multiple choice quizzes. How students perform on homework is a function of their ability to cheat (undetected) with AI. AI does the homework for the students, then AI grades the homework for the teachers. Is anyone learning from this?

Sebjenseb has written about the impact of AI on education. Educational institutions are not methodologically flexible or progressive. They do not respond to changing circumstances by increasing the difficulty for students to compensate for failing measurements. Their incentive is the exact opposite: to serve as a diploma mill.

This isn’t a historically novel problem. In the 11th century, Gregorian reformers were upset that virtually anyone could become a priest. You could be a married man or an imperial loyalist. Priestly titles, like the bishopric, were bought and sold. Married priests favored their sons and advanced nepotism within the church. Gregorian reformers saw nepotism and bureaucracy as a corruption of the priesthood. They wanted a pure, meritocratic, and holy order.

The problem of academia is not framed in Christian terms, but shares many of the same concerns. Diplomas are bought and sold, rather than earned. People cheat with AI, and anyone can become a graduate of anything. IQs are dropping, along with competence. Cheaters win, while academic honesty is punished. This isn’t just a problem with AI, but a cultural problem generally. Professors are unwilling or unable to subject students to cheat-proof exams, like oral exams. The victimary bureaucracy denounces oral exams as “discriminatory” against protected classes: non-native English speakers, or victims of anxiety disorder, for example.

the job market.

As college degrees become more “corrupt” and less rigorous, employers will have to adapt their hiring process. As the value of a college degree decreases, employers will be forced to adopt more rigorous forms of testing in order to screen employees. This includes standardized testing, psychometric testing, and multiple rounds of interviews.

The trait which employers value most besides intelligence is conscientiousness. Conscientiousness is very difficult to test for in the short-term. It is possible that genetic tests or brain scans could be used to project a person’s level of conscientiousness, but these are likely to be opposed as discriminatory.

As employers are less able to test for conscientiousness, they will be forced to rely more on work history as an accurate measurement of conscientiousness over time. Did your employee show up on time? Did they do their work, even when no one was watching? Did they submit reports on time? Was their work thorough and complete?

Corporate HR teams may begin to hire third party platforms to streamline this process. Each employee can be given a score by an employer, which then is uploaded to a social media website like Indeed or LinkedIn. This score is like a Yelp score, but for an individual employee, rather than for a business. This is roughly equivalent to a privatized version of China’s social credit system.

The impact on employees will be disastrous and humiliating. A single bad review from an employer, especially early on in your career, will destroy future prospects. Not going to college severely limits the options of women in the workplace (absent the male-dominated visual-spatial trades). In the same way, not being a slave to your employers will destroy a person’s “employee credit score.”

This won’t benefit employers. It is an attempt to compensate for a loss of selective efficiency in academia due to corruption. Academia in 1950s America (when the professoriate was experiencing a communist revolution) was far superior in selecting for competence than academia today, and things will become exponentially worse in the coming decades. The employee credit score would be a flagging attempt by corporations to make up for the loss of academia as a credible system. Such a future will be worse for employers, and much worse for employees.

As late as the 1990s, it was possible to scream at your boss “I QUIT!”, storm out, move to a new town, and get a new job. Employees had dignity and respect. As academia has become increasingly worse, employers are able to treat their employees worse and worse. Unpaid internships, multiple rounds of interviews, and conscientiousness-selecting Byzantine rules have replaced workplace dignity.

The HR labyrinth is a means by which corporations select for conscientiousness. Individuals who are low in conscientiousness will refuse to put up with degradations and humiliations.

HR risk avoidance.

This is not just a product of academia, but the state of civilization. In a high-complexity civilization, risk is exponentially amplified. In a low-complexity civilization, risk tolerance is higher. Perhaps this is why the horse was domesticated outside of civilization.

The greatest level of risk that corporations are exposed to is social risk. John Schnatter was a billionaire; when he used the “n-word,” he was fired from the company that he built. Schnatter claims the word was used within the sentence, “What bothers me is Colonel Sanders called blacks n******. I'm like, I've never used that word. And they get away with it.”

In the case of Schnatter, he alleges that a PR company, Laundry Service, conspired against him with other members of the board. Their intention was to remove Schnatter from leadership so that the company, whose stock prices had fallen precipitously, could be free to pursue a new strategy.

In either case, the fact that Schnatter felt the need to resign because of his “exposure” demonstrates the power of bad PR, or low conscientiousness. In an interview, Schnatter expressed regret over using the racial slur, rather than just saying “n-word.” This demonstrates low social conscientiousness on his part.

One way to describe wokeness (or moralism, or leftism in general), is as the extreme policing of social conscientiousness and conformism, with ever-evolving and shifting semantic games. As universities lose the ability to test the competence of students, they will double down on wokeness. As corporations become increasingly skeptical of universities, they will also double down on wokeness as a means to select for social conscientiousness. Wokeness is a proxy for the willingness of a person to performatively signal their loyalty to corporate America.

AI is not the only force driving this process, but is only one part of a collapse in standards and social trust. Ironically, it is the field of software development generally that allows the greatest freedom of thought regarding social conscientiousness. This may partially be due to the fact that software development (unlike medicine, biology, law, or finance) has a relatively low verbal-tilt. Those with lower verbal tilt are less able to performatively signal their social conformism.

It’s not that computer nerds are more masculine, more Christian, or more conservative, but that they are more frustrated with using social conscientiousness as a proxy for employability. They don’t feel that social conscientiousness correlates with competence, and in fact, displays of social conscientiousness are generally a means of covering up a lack of competence (midwits).

Even if you don’t “lose your job to AI,” AI could still accelerate a process of bifurcation within the corporate world. On the one hand, some corporations may double down on social conscientiousness, forcing employees to perform their social conscientiousness with increasing intensity and frequency. On the other hand, other corporations may figure out increasingly invasive or humiliating alternatives to social conscientiousness, in the form of multiple waves of interviews, unpaid internships, and an “employee credit score.” This will limit the freedom, dignity, and autonomy of white collar workers. It will no longer be possible to quit a job cleanly and try something new, because the “employee credit score” will follow you everywhere.

There is an optimal level of social conscientiousness determined by market demand. Screaming the n-word is not rewarded by customers. But forcing employees to put their pronouns in bio is also not rewarded by customers. Whether the market will be able to incentivize this optimization depends on the sensitivity of exposure.

An entertainment company like the NFL is much more sensitive to exposure than an accounting firm. As a result, the NFL has actively signaled its support for BLM, despite the fact that this was undesirable on the part of the audience. Accounting firms have much less social exposure, and are less likely to socially signal.

Luxury brands like Jaguar, which do not sell performance, but status, are sensitive to competition in social conscientiousness. Education is a product or service, but since it is increasingly difficult to measure the “performance” of education, it is subject to the greatest competition in social conscientiousness.

AI will not render it impossible to judge competence, but it will add to increasing confusion and social taboos surrounding competence. It will create a period of disruption that will amplify existing trends. Eventually, however, accurate tests of competence will return, either through re-born educational institutions, or parallel corporate structures.

geopolitics and automation.

I have predicted that the AI revolution will not be good for China. This is because I believe that AI will amplify the effects of automation. China has an advantage in automation, first because of state policy, second because of cheap labor, and third because of skilled labor.

First, the Chinese forcibly industrialized their country with communist tactics. Second, they built an industrial base by providing cheap labor for America and Europe. Third, they educated their population in math and science to facilitate automation.

However, building a robot to build a car is a solved problem. Why can’t a robot build an entire car factory with no human intervention at all?

It may be more difficult to build self-driving cars than to build self-building factories. In this sense, I am referring to a self-building factory as an AI which acts as a construction site manager and tells low-skilled construction workers what to do. “Dig here. Place this here.”

China has built bridges and trains and skyscrapers, and all of this construction has required skilled engineers with advanced degrees, who create plans and submit these plans to construction managers, who oversee a team of machine operators. But if AI could plan out the construction of bridges, trains, and skyscrapers, then an entire class of experts is no longer needed.

I predict that in the future, China’s massive investment in engineering skills will be disrupted. These workers will not become useless, since their intelligence is useful in other fields. But the task of building a factory, bridge, train, or skyscraper is not novel, and can easily be solved with AI. It requires some problem-solving ability, but it is no more novel than the task of driving a car down the street. It is more complex, but not more novel. Building factories, planes, trains, bridges, and skyscrapers is something that could be completely overseen by AI, with the actual execution of construction being done by low-skilled workers.

wave 3 industrialism:

In the past, China had a huge workforce of cheap labor, as well as a class of skilled engineers who directed those laborers. This gave China a competitive advantage in manufacturing. However, as AI degrades the importance of structural engineers, manufacturing ability will become more a function of labor availability than of technical expertise.

AI will become better than humans at low-level coding, because much of coding is rote memorization of syntax. Instead of learning how to write “hello, world!” in Python, you can just ask AI to do it for you. But while AI knows how to code, it doesn’t know what to code. It can’t determine which problems are worth solving. If you ask an AI, “what should I code?”, unless you provide it with a very specific prompt, “display hello world”, it will be unable to answer.

There are 289 million Chinese citizens under the age of 18. This represents 16.48% of the total population. In North and South America, there are 220 million citizens under the age of 18, which is 21.52% of the population. In the EU, there are 79 million children, which is 17.61% of the population. In total, North and South America, plus the EU, have 298.6 million children, or 20.32% of the population.
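The combined figure for the Americas and the EU can be reproduced from the component numbers. The sketch below back-computes each region's total population from its child count and share, then pools them. It uses only the rounded figures quoted above, so it lands on 299 million rather than 298.6 million, and the variable and function names are invented for the example.

```python
# (children under 18 in millions, share of total population in percent),
# as quoted in the text.
AMERICAS = (220.0, 21.52)
EU = (79.0, 17.61)
CHINA_CHILDREN_M = 289.0


def implied_population(children_m: float, share_pct: float) -> float:
    """Total population (millions) implied by a child count and its share."""
    return children_m / (share_pct / 100.0)


def combined(*regions: tuple[float, float]) -> tuple[float, float]:
    """Pooled child count (millions) and pooled share (percent)."""
    children = sum(c for c, _ in regions)
    population = sum(implied_population(c, s) for c, s in regions)
    return children, 100.0 * children / population


if __name__ == "__main__":
    children, share = combined(AMERICAS, EU)
    print(f"combined children: {children:.1f}M ({share:.2f}% of population)")
    print(f"margin over China: {children - CHINA_CHILDREN_M:.1f}M")
```

Because the pooled share is weighted by population, it sits much closer to the Americas figure than to the EU figure.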

Already, “NATO+” has more children than China. It is only a matter of time before this advantage makes itself apparent in the size of the working-age population. China’s two-child policy is not going to change this — it only slows down the process, but does not reverse it (2 children is still below replacement). In order for China to reverse this trend, it must enforce a three-child policy. Theoretically, the three-child policy was enacted in 2021, but this does not seem to have had any appreciable effect on the Chinese birth rate, which is still decreasing. Authoritarian communism has failed, for now. Maybe if China starts torturing and killing women for not getting pregnant at age 16, something might change. We will see.

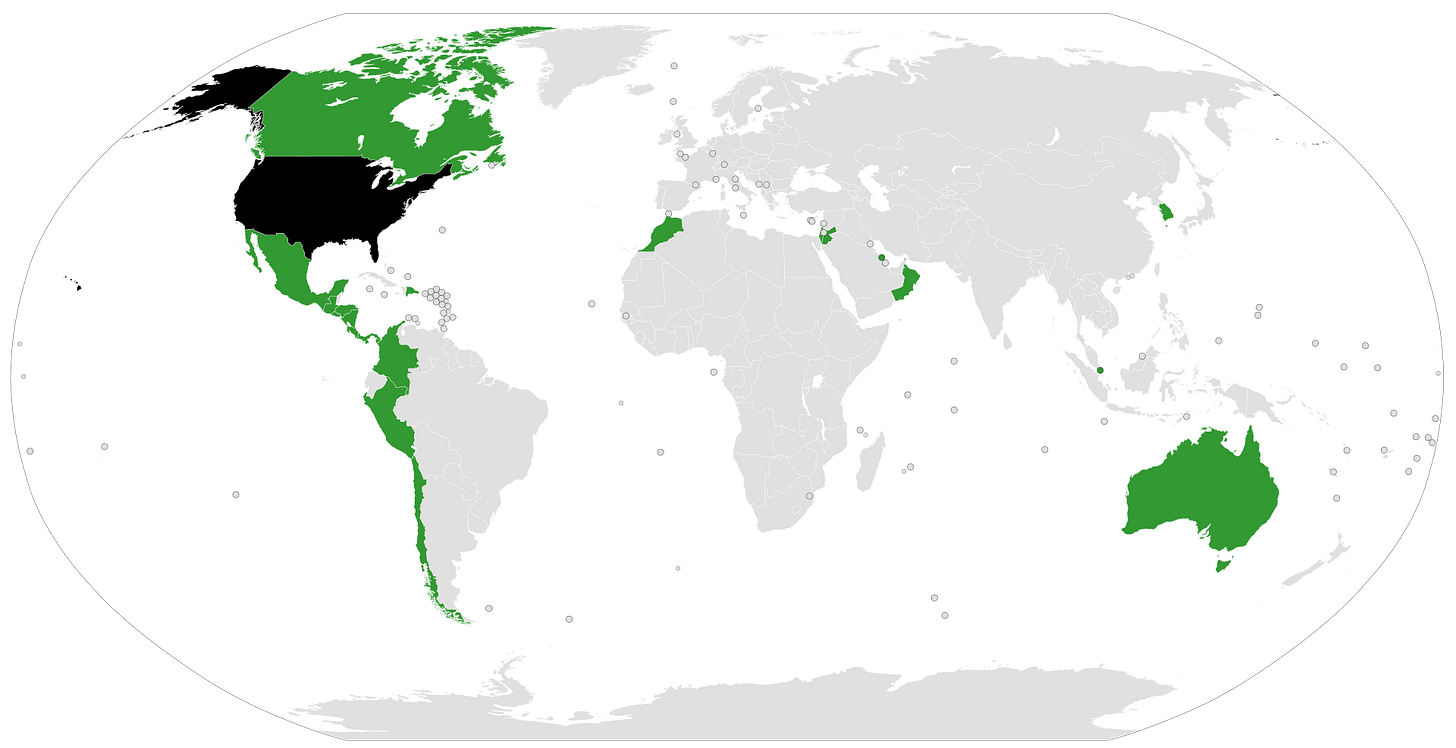

The concept of “NATO+” depends on the ability of America and Europe to absorb Latin America within their economic orbit. This has already been accomplished to a large extent in Mexico, Chile, Peru, Costa Rica, Dominican Republic, El Salvador, Guatemala, Honduras, and Nicaragua. Argentina under Milei is moving closer to America, and Brazil under Bolsonaro seemed to be making the same advances, although this process has been interrupted by Lula.

The greatest obstacles exist in Venezuela, Cuba, and Bolivia, but these are not at the scale of Iran or Russia. These countries do not possess nuclear weapons, and Venezuela is only a third of the size of Iran. The 2024 election demonstrated that Hispanics can assimilate politically into the American system.

If America and Europe are able to integrate Latin America into an economic zone, then within 40 years, the Chinese advantage in labor availability will disappear, and NATO+ will have a larger working-age population. The Chinese advantage in mathematical intelligence will not confer a greater advantage in manufacturing capacity, since manufacturing will become a brute-force sheer output of the working-age population, rather than technical expertise.

In this sense, China’s rise, from 1990 to 2020, was largely fueled by an economic transition zone between Wave 1 industrialism and Wave 3 industrialism. Wave 1 involved low-skilled workers on assembly lines. Wave 2 involved increasing automation and the use of robots, designed by engineers. China excelled in Wave 1 and Wave 2 because of its large workforce and its engineering skills. However, during Wave 3, engineers will be replaced by AI, and workforce size will become more important than mathematical engineering ability.

This doesn’t mean that mathematical ability will cease to have any significance in any field, but that it will largely be redirected toward biotech, as manufacturing becomes a trivial task from a managerial standpoint. The Chinese will have to dramatically shift their economy away from manufacturing and toward biotech if they wish to remain competitive. So far, the Chinese have not demonstrated their ability in this regard.

In this sense, AI will represent a paradoxical shift backwards in the field of manufacturing, where unskilled labor (trades) becomes more important than skilled labor (engineers). This isn’t a universal statement. In biochemical technology, skilled labor will still be necessary to make advances. However, building cars, planes, trains, bridges, and military drones is mostly a solved problem with very little room for improvement. Marginal gains can be made, but these cannot compare with the astronomical advances which are yet to be made in biochemistry.

This doesn’t necessarily mean that China will collapse entirely in 40 years. Rather, it is more likely that China enters a period of stagnation, similar to what Japan experienced in the 1990s. It will not lose its absolute power or shrink economically, but it will not be able to sustain its current levels of growth.

A Pew Study of immigration in 2022 found that 90,000 Chinese citizens immigrated to the United States, which is much larger than the number of Americans who move to China. If China relaxed its immigration and citizenship requirements, it would face the challenges of multiculturalism. It is unlikely that China can absorb more immigrants than America. It cannot close the widening labor gap, and it will lose the third wave of the industrial revolution.

Summary:

AI has limited military applications, and is inferior to carbon-water biotech in most cases (the exception being space warfare).

AI is going to increase performative virtue signaling in colleges and corporations. As selection for competence becomes less efficient, academies and employers will place more emphasis on displays of social conscientiousness. This will reduce overall economic efficiency and lead to a temporary slow-down of the global economy, but will eventually be overcome as the benefits of AI compensate for decreasing competency.

Companies will attempt to compensate for the lack of efficiency in competency testing by investing in more psychometric testing. They will also subject employees to increasingly tyrannical and dystopian conditions, an “employee credit score.” Regular employees will experience the freedom and autonomy of Roman-era slaves. It will no longer be possible to quit a job and move on with your life, since the “employee credit score” will follow you wherever you go in the world.

AI will replace the engineering class, reducing China’s manufacturing advantage. Manufacturing will rely more heavily on labor availability as opposed to engineering ability. NATO+ will exceed China in production capacity once factory production becomes fully automated by AI. Manufacturing will no longer require high-skilled mathematical ability, but low-skilled construction crews managed by AI bosses.

Yes, I imagine the only reason we imagine a cyberpunk future instead of a bio-punk future (think: Yuuzhan Vong, Ayleids, AOT Titan “mechs”) is because we basically have free rein to innovate with silicon intelligence and extremely limited range to innovate with carbon-water intelligence. Carbon is seemingly the most versatile element in the universe. It can be used to create life, it can naturally (with other organic compounds) form into rich energy sources (natural gas isn’t necessarily from dead life, there are moons with seas of hydrocarbons), and the strongest substances are made of carbon (Kevlar, diamonds). The main mechanical issue with carbon-based life is that it requires relatively inefficient forms of energy consumption. You can’t just plug it in. It needs other nutrients as well and especially oxygen to exist, which in large animals requires certain fragile systems. It’s easy to give people Kevlar skin, it’s a lot harder to give them Kevlar organs. It’s possible that with a good enough grasp on biology, humans could “go anabolic” and live off strange means like extremophile microorganisms. The problem is, like I said before, biology marches at an extremely slow pace due to regulations in testing and sale and the tediousness of long-term studies.

I’m not sure how far we can get with regeneration from just carbon. There is a huge benefit to “wireless” life, it can do things faster and with more complex coordination. But as you point out, this would be rendered vulnerable to wireless attacks. Chemical agents can be used against humans but you can’t hack a human. The only solution to this I could see is something really advanced like relying on quantum entanglement but this is a lot to ask when quantum computing is already proving to be a bust.

I strongly disagree with your take on silicon vs carbon water cost. You totally miss that human cost is a constant, while silicon has been improving exponentially for the past 70 years. The supercomputers of yesterday are today cheap gizmos and the equivalent will be true in the future.